Example and Use Case 2 – Data Orchestration Techniques

By Sheila Simpson / November 8, 2021 / No Comments / Amazon AWS Exams, Azure and AWS, Azure Synapse and Its ETL Features, Microsoft Exams

Add Data Flow

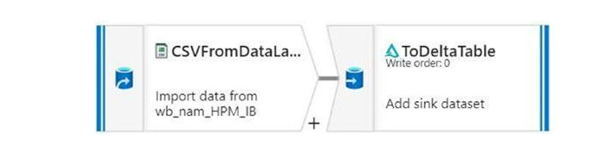

Figure 5-16 illustrates a Dataflow activity, an advanced feature in Data Factory that facilitates both data transformation and advanced capabilities, including Delta table loading.

Figure 5-16. Azure Data Factory data flow activity

• Within Azure Data Factory, go to the Authoring section and select “Data Flows.”

• Click on “Create data flow” and provide a name for your data flow.

• Select the source dataset that contains the data you want to load into the delta lake.

• Configure the source dataset by selecting the appropriate file format and defining the schema.

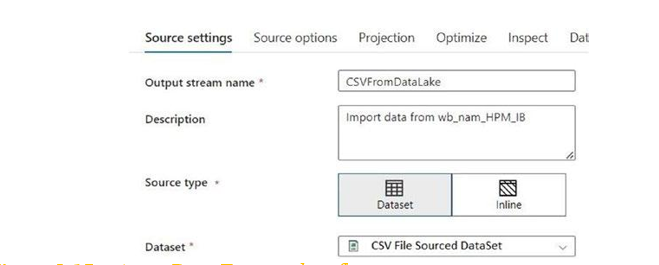

The figure below, labeled 5-17, outlines the interface for configuring settings related to a datasource within a Dataflow.

Figure 5-17. Azure Data Factory data flow source settings

• Specify the connection details for the data source, such as Azure Blob storage, Azure Data Lake Storage, or any other supported storage service.

• Within the data flow designer, add transformations to manipulate and shape the data.

• Use transformations such as Select, Filter, Aggregate, Join, and Derived Column to transform the data as needed.

• Apply any additional data cleansing or enrichment operations required for your use case.

• Add a sink transformation to define the destination of the data flow.

• Select the Delta Lake format as the sink type.

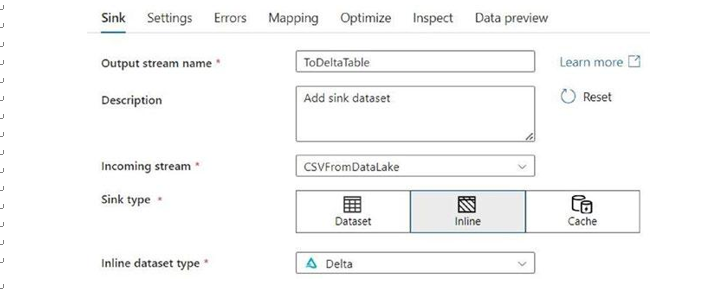

The figure labeled 5-18 delineates the interface for configuring settings associated with a data destination “sink” within a Dataflow. When loading data from the source into a Delta table, please ensure that the Inline dataset type is set to Delta.

Figure 5-18. Azure Data Factory data flow sink settings, note the delta format in inline dataset type

• Specify the output folder or container where the Delta Lake files will be stored.

• Configure the sink dataset, including the connection details for the storage service.

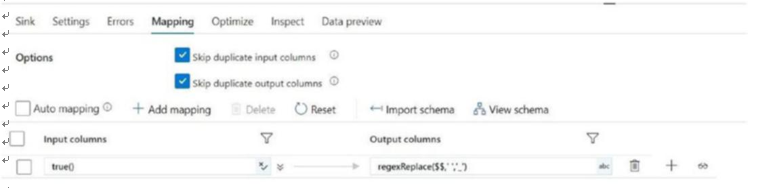

• Choose delta table format as an option, remember to remove extra spaces from columns with tools like regex for all input columns; e.g., [regexReplace($$,’ ‘,’_’)]

The diagram marked as 5-19 illustrates a standard column cleaning procedure necessary when a column contains spaces that need to be replaced with underscores. The Delta table processor, as of the current version during the writing of this book, does not support spaces in column names for Delta protocol versions below 2.5.

Figure 5-19. Azure Data Factory mapping setting for cleaning spaces in all the input column at once

• Once the data flow is designed, publish it to save the changes.

• Create a pipeline within Azure Data Factory that incorporates the data flow.

• Configure the pipeline with appropriate scheduling or trigger settings.

• Execute the pipeline to run the data flow and create/update delta lake.

Publish and Execute the Pipeline

• Once the pipeline is designed, publish it to save the changes.

• Configure the pipeline with appropriate scheduling or trigger settings.

• Execute the pipeline to start the file processing and loading of data into the delta table.

Monitor and Validate

• Monitor the execution of the pipeline using Azure Data Factory’s monitoring capabilities.

• Validate the results by checking the delta table to ensure the processed data is correctly loaded.

• You can also use Azure Data Factory’s logging and error handling features to identify and troubleshoot any issues during file processing.

By following these steps, you can leverage Azure Data Factory to process files and load the data into delta tables. Azure Data Factory provides a scalable and reliable platform for managing file processing tasks and integrating with delta lakes for efficient data storage and processing.

In this chapter we have explained multiple options, like data lake, data lakehouse and data mesh, that can be used to create an enterprise data warehouse. The topic here describes the need to cater to different aspects when building an enterprise data warehouse.